By Reynolds Antwi AGYAPONG

The recent advancement in artificial intelligence (AI) has raised several important conversations across different areas of the human experience.

Historians such as Yuval Noah Harari have expressed worry about what the new information networks being created by AI would mean for humans’ ability to decipher truth.

What would happen as we enter a post-truth society where we cannot trust anything digitally created, including one of our most definitive tests of evidence: voice and video? Would that potentially undo the progress we have made in the information and communication technology domain?

Security analysts are concerned about bad actors leveraging AI to perpetrate crime at a level not yet imagined. Psychologists worry about the long-term impact it would have on the human attention span, cognitive ability and critical thinking skills, especially for the next generation, who will grow up without any prior body of knowledge before their exposure to AI.

Ethicists are anxious about what it would mean to live in a world where everyone has access to ‘superhuman’ intelligence, and their agents (digital doubles) interact with one another.

Amidst all these important debates, I am inclined to think that we often miss one of the most crucial conversations of all: what these innovations mean for the global socioeconomic architecture, which has been the foundation for wealth creation (through capitalism) and social cohesion (through democracy).

In 2016 (which seems like an eternity in AI history), I authored Saving Capitalism from Itself: Why It Matters. At the time, I was concerned that large-scale industrial automation could upend the middle class, which is the backbone of the capitalist economy, and with it Western democracy as we know it.

The main counterargument, then and even now, is that previous such ‘prophecies of doom’ around technological advancements were followed by the complete opposite of the outcomes they predicted.

After all, even Socrates, who preferred dialogue as the method of knowledge dissemination, was concerned, not surprisingly, that writing would negatively affect memory and learning – he was wrong.

In the 1980s, some teachers thought that the introduction of calculators in elementary schools would affect children’s mathematical abilities and vehemently protested against it – they were wrong. In the 1990s, some people felt the use of computers in business would lead to the loss of numerous white-collar jobs – it ended up creating many jobs never even anticipated.

However, this narrative ignores two fundamental errors in human reasoning: our tendency toward outcome bias and confirmation bias. We are often quick to cherry-pick examples that legitimize our positions and to extrapolate from them.

Contrary to those widely held opinions about technology, one can also identify numerous examples of innovations that were initially expected to have the best outcome for humanity but turned out to be complete disasters.

Alfred Nobel thought that his invention, dynamite, would be used for peaceful purposes – he was wrong. The Wright brothers may never have imagined that their desire to see humans enjoy the thrill of flying would also give us the ability to drop bombs on other humans thousands of miles away. The point here is that the ultimate outcome of any invention is often appreciated only in hindsight.

Nonetheless, the right foresight, through a rational model of the future, provides us with the knowledge to anticipate some of the potential downsides of these inventions so we can create the necessary tools to alleviate them. The biggest challenge when it comes to AI is the sheer speed of innovation, which makes it difficult for even experts in the field to have that right foresight.

It took 118 years from the creation of the telephone by Alexander Graham Bell to the release of the first smartphone (the IBM Simon Personal Communicator in 1994) and 131 years to the first iPhone – a period long enough for humans to adapt and reinvent. Of course, some critics may argue that AI has also been long in the making, since its foundation was laid more than 75 years ago when Alan Turing published his seminal paper Computing Machinery and Intelligence.

While this is fundamentally true, few things, if any, could rival the breakneck speed of the recent advances in AI and its adoption and integration into the mainstream of human reality. Barely two years ago, only a handful of people on the planet knew about large language models (LLMs) like ChatGPT, and yet for many reading this piece today life is almost impossible without them.

Given how quickly AI has been integrated into life and business, it is safe to argue that, unlike previous technological innovations, which empowered humans to be more productive, AI marks a significant shift, as it essentially creates an ‘alternative superhuman’ – except for mobility, which is likely to be resolved soon given the recent advances made with humanoid robot development. Couple these developments with the Friedman doctrine, which makes profits the fundamental pursuit of business, and what you get is the perfect recipe for economic disaster. Here’s why:

Businesses are incentivized to increase revenue and reduce expenses. If their objective is the maximization of shareholder value, then it goes without argument that any opportunity to eliminate remuneration would be exploited if it leads to the attainment of that objective.

Even if a company were inspired by some altruism towards humans, the very tenets of market-based competition would mean a preference for machines over humans. Indeed, much of the history of capitalism has been anchored on exploring the most efficient ways to lower the cost of the factors of production – this hinges on access to the cheapest raw materials and labor.

The tendency to choose robots over humans also stems from a fundamental misunderstanding of how the economy works at the macro level, and from each firm pursuing its own objectives even if that ultimately leads to a disastrous outcome for everyone – a classic game of chicken.

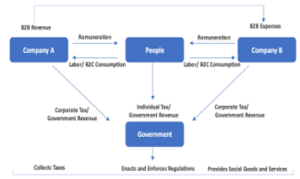

To better appreciate this view, let us consider a simplified model of the free market economy with three actors: Companies, People, and Governments. People supply labor and consume the products and services generated by companies.

Even for companies that operate purely in the business-to-business space, the ultimate beneficiaries of their output are people, through derived demand. As I put it, even if you produce cat food you do not market it to cats. Fundamentally speaking, the remuneration that is classified as an expenditure item on one company’s income statement is revenue on another’s.

Government mobilizes revenue through taxation, the bulk of which comes from people. This tax revenue is utilized in the provision of social services to keep the wheel of democracy running.

This self-perpetuating system collapses as soon as its key and arguably most important anchor, people, is eliminated, i.e. when their labor, which gives them access to the consumption that oils the engine of capitalism, is no longer needed.

The only way to steady the system in such a situation is for government to increase corporate taxes to compensate for the loss of remuneration through some form of universal basic income.
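To make that trade-off concrete, here is a rough back-of-the-envelope sketch of the three-actor model. Every number and name in it is an illustrative assumption of mine, not data: companies earn a fixed revenue of 100, labor normally receives 60% of it, income is taxed at 25% and profits at 20%. The function asks what corporate tax rate would be needed, at a given level of automation, for government to keep its existing budget and top household income back up to its pre-automation level through a universal basic income.

```python
def required_corporate_tax_rate(automation, revenue=100.0, labor_share=0.6,
                                income_tax=0.25, corporate_tax=0.20):
    """Corporate tax rate needed to keep household income and the
    government budget at their pre-automation levels.

    All parameters are hypothetical, chosen only for illustration.
    `automation` is the fraction of the wage bill replaced by machines (0 to 1).
    """
    if not 0.0 <= automation <= 1.0:
        raise ValueError("automation must be between 0 and 1")

    # Baseline economy, before any automation.
    base_wages = labor_share * revenue
    base_profit = revenue - base_wages
    base_gov_revenue = income_tax * base_wages + corporate_tax * base_profit
    base_disposable = base_wages * (1 - income_tax)

    # After automation: wages fall, and the saved wages become profit.
    wages = base_wages * (1 - automation)
    profit = revenue - wages

    # Universal basic income that restores household disposable income.
    ubi = base_disposable - wages * (1 - income_tax)

    # Whatever income tax no longer covers must come from companies.
    needed_from_companies = base_gov_revenue + ubi - income_tax * wages
    return needed_from_companies / profit


if __name__ == "__main__":
    for share in (0.0, 0.25, 0.5, 0.75, 1.0):
        rate = required_corporate_tax_rate(share)
        print(f"automation {share:.0%}: corporate tax rate {rate:.1%}")
```

Under these made-up numbers, the required corporate tax rate climbs from 20% with no automation to 68% when all labor is automated. The sketch holds company revenue fixed, which is only reasonable because the basic income keeps household spending power unchanged; it says nothing about whether such a tax rate would be politically or economically feasible.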

How would that impact democracy as we know it if governments rely on a few large corporations for tax revenue and the people are dependent on their government for survival? Would it lead to the collapse of the regulatory mechanism and transition society from a democratic to a dystopian state?

Alternatively, would it create a kind of utopia where we humans spend the rest of our lives in luxurious indulgence whilst humanoid robots do all the work? Given that for much of recorded history humans have partly defined the ‘meaning of life and of our existence’ through our careers, what would it mean for us to live in a world without work, even if we managed to implement a perfect system of universal basic income?

Would we utilize our time, in the absence of work, in the pursuit of knowledge and of the arts? What would be the new incentives for this when we are aware that our knowledge cannot surpass that of the ‘superhuman’ robots we’ve created?

I wouldn’t pretend to have answers to these existential questions except to say we humans, through our governments, global organizations, corporate powers and thought leaders, ought to lift our heads, however briefly, from the AI frenzy and dedicate resources to explore possible solutions to these complex issues.

Failure to do so could potentially spell the doom of capitalism and with it Western democracy, given how inextricably intertwined the two are. Unfortunately for us, given the pace of the AI revolution, we are already running out of time.

The writer is an MBA candidate at Indiana University’s Kelley School of Business. He holds an MA in Economics from CERGE-EI (Charles University – Czech Academy of Sciences) and a B.Sc. in Administration from UGBS. He is the author of Timeless Tales of Tangible Truths.